Event-based cameras have recently become a focal point in computer vision and robotics, thanks to properties that conventional frame-based cameras lack. These sensors, such as those made by Prophesee and the DVS (Dynamic Vision Sensor), work on a fundamentally different principle: instead of capturing full images at a fixed interval, each pixel independently reports changes in brightness as they occur. This blog post looks at the data formats used by event cameras, highlights their advantages, and explores their potential uses.

Dedicated data formats have been devised to store the event data produced by cameras such as those from Prophesee and iniVation. The most common are:

AEDAT Format (iniVation Camera)

- Binary Format: AEDAT has gone through several versions. Early versions paired a human-readable text header with simple fixed-size binary event records, while AEDAT 3.x moved to a packet-based binary layout and the more recent AEDAT 4 adds a serialized container with optional compression. This evolution addresses the need for efficient data storage and processing, which is crucial for handling the high data throughput of event-based vision sensors.

- Event Representation: Each event in the AEDAT format includes a timestamp, x and y coordinates, and polarity information. The binary encoding of these elements allows for precise temporal resolution and spatial localization of events, critical for applications requiring detailed motion analysis and temporal dynamics understanding.

- Efficiency and Scalability: The binary nature of the AEDAT format ensures that it is more compact and faster to process than a purely text-based format would be. This efficiency makes it suitable for real-time applications and managing large datasets generated in extended recordings or high-speed scenarios.

- Interoperability and Tooling: Although the AEDAT format is tied to iniVation’s technology, it is supported by a range of tools and libraries for data processing, visualization, and conversion. This ecosystem makes it practical for research and development, although users remain somewhat dependent on iniVation’s infrastructure.
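To make the event representation and the efficiency argument concrete, here is a minimal Python sketch. The record layout (`EVENT_DTYPE`), the helper names, and the synthetic data are assumptions made for illustration only; this is not the AEDAT on-disk layout, which adds headers and packet structure on top of the raw events.

```python
import numpy as np

# Hypothetical fixed-size event record (NOT the real AEDAT layout).
EVENT_DTYPE = np.dtype([
    ("t", "<i8"),  # timestamp in microseconds
    ("x", "<u2"),  # pixel column
    ("y", "<u2"),  # pixel row
    ("p", "u1"),   # polarity: 1 = brighter, 0 = darker
])

def make_events(n, width=640, height=480, t0=1_000_000, seed=0):
    """Generate n synthetic events with strictly increasing timestamps."""
    rng = np.random.default_rng(seed)
    events = np.zeros(n, dtype=EVENT_DTYPE)
    events["t"] = t0 + np.cumsum(rng.integers(1, 50, size=n))
    events["x"] = rng.integers(0, width, size=n)
    events["y"] = rng.integers(0, height, size=n)
    events["p"] = rng.integers(0, 2, size=n)
    return events

def to_binary(events):
    """Serialize events as packed fixed-size records."""
    return events.tobytes()

def from_binary(blob):
    """Reinterpret a byte string as an array of event records."""
    return np.frombuffer(blob, dtype=EVENT_DTYPE)

def to_text(events):
    """Serialize events as one 't,x,y,p' line per event, for comparison."""
    lines = (f"{t},{x},{y},{p}" for t, x, y, p in events.tolist())
    return "\n".join(lines).encode("ascii")

events = make_events(1000)
blob = to_binary(events)
restored = from_binary(blob)
text = to_text(events)

print("bytes per event (binary):", EVENT_DTYPE.itemsize)
print("binary size:", len(blob), "text size:", len(text))
```

Even in this toy setup the packed records are smaller than the text encoding, and reading them back is a single reinterpretation of the buffer rather than a parse of every line.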

Reference: Continuous-Time Trajectory Estimation for Event-based Vision Sensors (researchgate.net)

EVT Format (Prophesee Camera)

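- Proprietary Binary Format: The EVT format is also a binary format, designed by Prophesee to optimize the recording and processing of event data. It exists in several versions (EVT 2.0, EVT 2.1, and EVT 3.0), and the encoded event stream is typically stored in Prophesee’s RAW files. It encapsulates events in a compact, efficient manner, tailored to the high performance of Prophesee’s sensors.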

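- Data Aggregation and Metadata: One of the distinctive features of the EVT format is its approach to data aggregation. Rather than storing a full timestamp and address with every event, the encoding shares common information across events, and EVT 3.0 can additionally pack several events from the same row into a single vector record. Beyond pixel events, the stream can carry other information such as external trigger events, and the RAW file header stores metadata about the recording, which adds to the information available for analysis.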

- Real-Time Processing and Large Datasets: The binary structure and data aggregation strategies contribute to the EVT format’s suitability for real-time processing and handling extensive event streams. These characteristics are essential for advanced applications in dynamic environments where rapid data interpretation is critical.

- Access and Security: The proprietary nature of the EVT format means that it is optimized for Prophesee’s ecosystem. Working with EVT files usually relies on tools provided by Prophesee, such as the Metavision SDK, which can limit interoperability with third-party software compared to more open formats.
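One way to aggregate event data is to factor out information shared by consecutive events. The sketch below uses a toy 32-bit word layout in which the upper timestamp bits are written once in a "time high" word and each event stores only the lower bits. The type codes, bit widths, and function names are assumptions chosen for illustration and should not be read as the EVT specification; consult Prophesee's documentation for the real encodings.

```python
import numpy as np

# Toy word types (illustrative assumptions, not the EVT specification).
TYPE_OFF, TYPE_ON, TYPE_TIME_HIGH = 0x0, 0x1, 0x8

def encode(events):
    """Encode (t, x, y, p) tuples, sorted by t, into 32-bit words.

    Layout of an event word: 4-bit type | 6-bit time low | 11-bit x | 11-bit y.
    A time-high word carries the timestamp bits above the low 6 bits.
    """
    words = []
    current_high = None
    for t, x, y, p in events:
        high, low = t >> 6, t & 0x3F
        if high != current_high:
            # Emit the shared upper timestamp bits only when they change.
            words.append((TYPE_TIME_HIGH << 28) | (high & 0x0FFFFFFF))
            current_high = high
        kind = TYPE_ON if p else TYPE_OFF
        words.append((kind << 28) | (low << 22) | ((x & 0x7FF) << 11) | (y & 0x7FF))
    return np.array(words, dtype="<u4")

def decode(words):
    """Rebuild (t, x, y, p) tuples from the toy word stream."""
    events = []
    high = 0
    for w in words.tolist():
        kind = w >> 28
        if kind == TYPE_TIME_HIGH:
            high = w & 0x0FFFFFFF
        elif kind in (TYPE_OFF, TYPE_ON):
            t = (high << 6) | ((w >> 22) & 0x3F)
            events.append((t, (w >> 11) & 0x7FF, w & 0x7FF, kind))
    return events

sample = [(1000, 10, 20, 1), (1003, 11, 20, 0), (1010, 12, 21, 1), (1100, 300, 200, 1)]
words = encode(sample)
decoded = decode(words)
print(len(sample), "events ->", len(words), "words,", words.nbytes, "bytes")
```

In this toy layout each event costs 4 bytes plus an occasional time-high word, at the price of a decoder that must track state across words. Timestamps are limited to 34 bits and coordinates to 11 bits here.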

Reference: RAW File Format — Metavision SDK Docs 4.2.1 documentation (prophesee.ai)

Both AEDAT and EVT formats are binary and designed to efficiently handle the voluminous and high-speed data generated by event-based vision sensors. They share the goal of capturing detailed temporal and spatial information critical for dynamic scene analysis and motion detection.

The main differences lie in their proprietary nature and the specific features they support, such as the aggregation and metadata features of the EVT format and the broader tooling and support ecosystem around the AEDAT format. When choosing between these formats for an event-based vision project, users should weigh these factors against the requirements of their application, such as the need for real-time processing, data richness, and interoperability.

Event Data Processing

Regardless of the data format used, event data processing is a critical step to extract meaningful information from the event stream. The processing pipeline typically involves steps like:

- Event Accumulation: This step involves aggregating events within specified time windows, typically on the order of milliseconds, to generate event frames. Unlike traditional video frames, these event frames represent changes in intensity for each pixel, capturing the dynamic aspects of the scene. Advanced accumulation techniques might also weigh events by their recency, emphasizing more recent changes to better capture the temporal dynamics.

- Noise Filtering: Prior to or during accumulation, it is crucial to apply noise filtering techniques to remove spurious events caused by sensor noise, ensuring that only meaningful data is processed. Techniques such as temporal filtering, where only events that occur within a certain timeframe of other events are kept, or spatial filtering, which removes isolated events not supported by neighboring activity, are common.

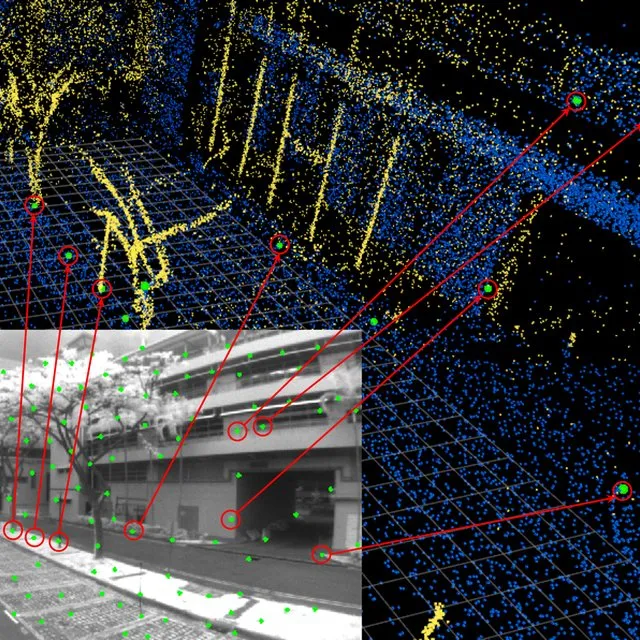

- Motion Estimation: This involves analyzing the event frames to deduce the motion of objects across the scene. Given the high temporal resolution of event-based cameras, they are particularly adept at detecting rapid movements, providing a significant advantage in applications requiring real-time responsiveness, such as autonomous vehicle navigation, drone flight control, and dynamic scene analysis. Algorithms for motion estimation may include optical flow techniques tailored for event data, which calculate the movement of pixels between event frames.

- Event Reconstruction: In scenarios where integration with existing computer vision frameworks is needed, reconstructing a conventional frame-like representation from the event stream becomes necessary. This process can involve generating synthetic frames that simulate traditional camera outputs, enabling the application of standard computer vision algorithms for tasks like object recognition or scene segmentation. Reconstruction methods might employ complex models to interpolate the intensity values between events, thereby approximating a full visual scene.

- 3D Reconstruction and Depth Estimation: By leveraging the high temporal resolution and motion sensitivity of event cameras, techniques for 3D reconstruction and depth estimation can extract spatial information about the scene. This is particularly useful in robotics and augmented reality (AR) applications, where understanding the geometric structure of the environment is crucial.

- Time-Slicing and High-Dynamic Range (HDR) Imaging: Event cameras inherently capture the scene in high dynamic range, with each pixel operating independently to detect intensity changes. Processing pipelines can exploit this to produce HDR images or to analyze the scene at different time slices, providing insights into the luminance and motion not achievable with traditional cameras.

- Learning-Based Approaches: Recently, machine learning models, especially those based on deep neural networks, have been applied to event data for tasks such as pattern recognition, anomaly detection, and predictive modeling. These approaches can learn complex representations of the event stream, enabling sophisticated interpretation and decision-making based on the temporal patterns of the events.
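The first steps of the pipeline above (accumulation, recency weighting, and spatio-temporal noise filtering) can be sketched with NumPy as follows. The function names, parameters, and sample events are assumptions for illustration, and the filter is a simple variant of the neighbor-support idea described above rather than any particular library's implementation.

```python
import numpy as np

def accumulate(t, x, y, p, shape, t_start, t_end):
    """Sum signed polarities per pixel for events with t_start <= t < t_end."""
    frame = np.zeros(shape, dtype=np.int32)
    mask = (t >= t_start) & (t < t_end)
    signs = np.where(p[mask] > 0, 1, -1).astype(frame.dtype)
    np.add.at(frame, (y[mask], x[mask]), signs)  # handles repeated pixels
    return frame

def time_surface(t, x, y, shape, t_ref, tau):
    """Recency map: exp(-(t_ref - t_last) / tau) per pixel, 0 where no event."""
    last = np.full(shape, -np.inf)
    mask = t <= t_ref
    np.maximum.at(last, (y[mask], x[mask]), t[mask].astype(float))
    return np.exp((last - t_ref) / tau)

def background_activity_filter(t, x, y, shape, dt):
    """Keep an event only if one of its 8 neighbors fired within dt before it."""
    h, w = shape
    last = np.full((h + 2, w + 2), -np.inf)  # padded map of last timestamps
    keep = np.zeros(len(t), dtype=bool)
    for i in range(len(t)):
        r, c = int(y[i]) + 1, int(x[i]) + 1
        patch = last[r - 1:r + 2, c - 1:c + 2].copy()
        patch[1, 1] = -np.inf  # ignore the pixel's own history
        keep[i] = (t[i] - patch.max()) <= dt
        last[r, c] = t[i]
    return keep

# Hypothetical events: timestamps in microseconds, sorted by time.
t = np.array([0, 100, 150, 5000, 9000, 9050])
x = np.array([5, 5, 6, 20, 10, 11])
y = np.array([5, 5, 5, 20, 10, 10])
p = np.array([1, 1, 0, 1, 1, 1])

frame = accumulate(t, x, y, p, (32, 32), t_start=0, t_end=1000)
surface = time_surface(t, x, y, (32, 32), t_ref=150, tau=100.0)
keep = background_activity_filter(t, x, y, (32, 32), dt=1000)
print("kept events:", keep.tolist())
```

Note that this filter always discards the first event of a burst, since nothing nearby has fired yet. That is a common trade-off of neighbor-support filters, and the window `dt` has to be tuned to the scene and sensor.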

Conclusion

Event-based cameras, exemplified by Prophesee’s sensors and the DVS, have emerged as a ground-breaking technology in the field of computer vision. With their dedicated data formats and distinct advantages, they offer considerable potential for applications ranging from robotics and surveillance to augmented reality. Embracing event-based cameras could change the way we perceive and interact with the visual world, paving the way for more efficient and intelligent vision systems in the future.